Analyze queries with EXPLAIN

Use EXPLAIN and EXPLAIN ANALYZE to understand how the query planner has decided to execute a query and view actual runtime performance statistics. These commands provide valuable insights into how YugabyteDB processes a query and by analyzing this output, you can identify potential bottlenecks, such as inefficient index usage, excessive sorting or inefficient join strategy, and other performance issues. This information can guide you to optimize queries, create appropriate indexes, or restructure the underlying data to improve query performance.

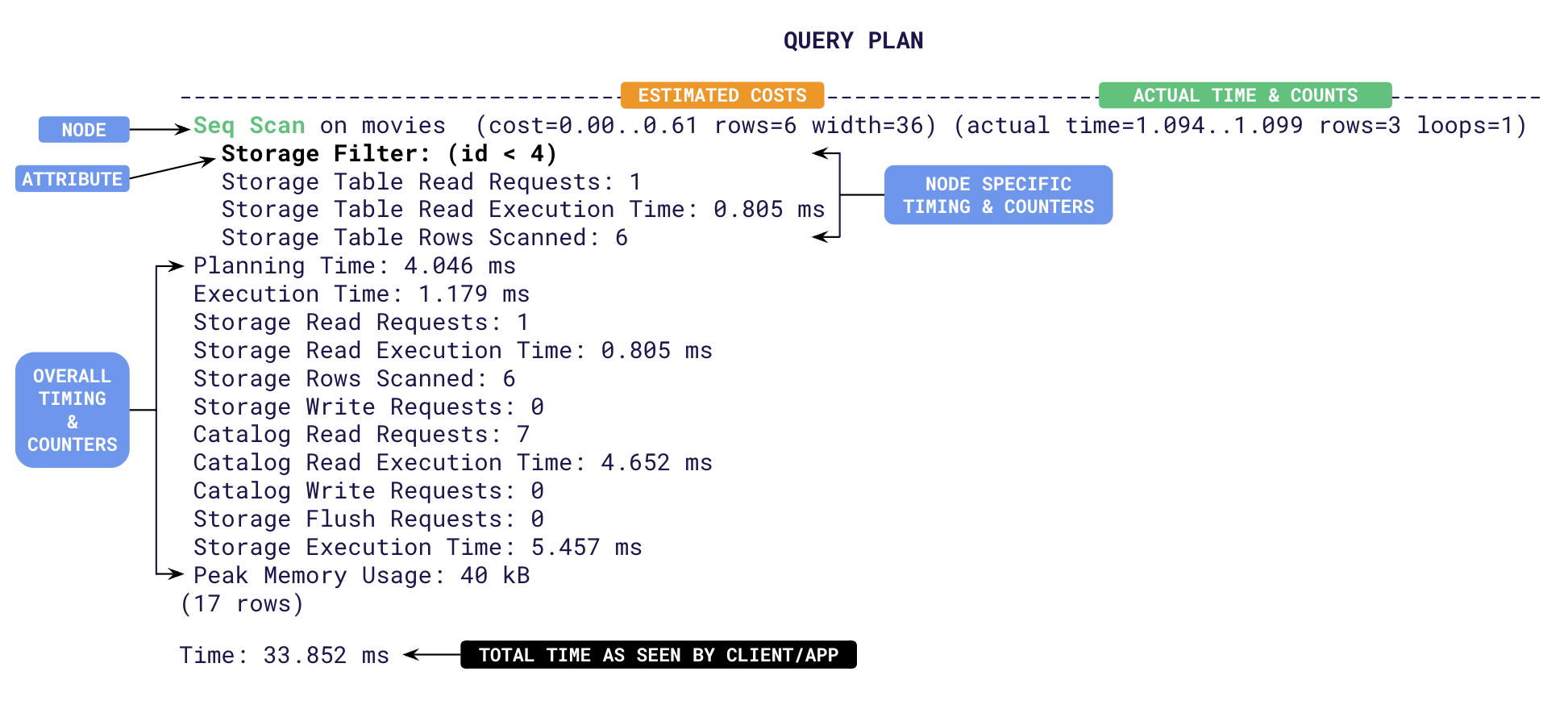

Anatomy of the output

The output is effectively the plan tree followed by a summary of timing and counters. Each line represents a step (a.k.a node) involved in processing the query. The top line shows the overall plan and its estimated cost. Subsequent indented lines detail sub-steps involved in the execution. The structure of this output can be complex but contains valuable information to understand how YugabyteDB processes a query. Let's break down the typical structure of the EXPLAIN ANALYZE output:

- Node Information: Each line of the output represents a node in the query execution plan. The type of node and the operation it performs are typically indicated at the beginning of the line, for example, Seq Scan, Index Scan, Nested Loop, Hash Join, and so on.

- Cost and Row Estimates:

- cost attribute is the estimated cost of the node's operation according to YugabyteDB's query planner. This cost is based on factors like I/O operations, CPU usage, and memory requirements.

- rows attribute is the estimated number of rows that will be processed or returned by the node.

- Actual Execution Statistics:

- time: The actual time taken to execute an operation represented by the node during query execution. This is represented in two parts as T1..T2 with T1 being the time taken to return the first row, and T2 the time taken to return the last row.

- rows: The actual number of rows processed or returned by the node during execution.

- Other Attributes: Some nodes may have additional attributes depending on the operation they perform. For example:

- Filter: Indicates a filtering operation.

- Join Type: Specifies the type of join being performed (for example, Nested Loop, Hash Join, Merge Join).

- Index Name: If applicable, the name of the index being used.

- Sort Key: The key used for sorting if a sort operation is involved.

- Sort Method: The sorting algorithm used (for example, quicksort, mergesort, and so on.)

- Timings: At the end of the plan tree, YugabyteDB will add multiple time taken metrics when the DIST option is specified. These are aggregate times across all plan nodes. Some of them are,

- Planning Time: The time taken in milliseconds for the query planner to design the plan tree. Usually this value is higher the first time a query is executed. During subsequent runs, this time will be low as the plans are cached and re-used.

- Execution Time: The total time taken in milliseconds for the query to execute. This includes all table/index read/write times.

- Storage Read Execution Time: The total time spent by the YSQL query layer waiting to receive data from the DocDB storage layer. This is measured as the sum of wait times for individual RPCs to read tables and indexes. The YSQL query layer often pipelines requests to read data from the storage layer. That is, the query layer may choose to perform other processing while a request to fetch data from the storage layer is in progress. Only when the query layer has completed all such processing does it wait for the response from the storage layer. Thus the wait time spent by the query layer (Storage Read Execution Time) is often lower than the sum of the times for round-trips to read tables and indexes.

- Storage Write Execution Time: The sum of all round-trip times taken to flush the write requests to tables and indexes.

- Catalog Read Execution Time: Time taken to read from the system catalog (typically during planning).

- Storage Execution Time : Sum of Storage Read, Storage Write and Catalog Read execution times.

- Time: The total time taken by the request from the view point of the client as reported by ysqlsh. This includes Planning and Execution times along with the client to server latency.

- Distributed Storage Counters: YugabyteDB adds specific counters related to the distributed execution of the query to the summary when the DIST option is specified. Some of them are:

- Storage Table Read Requests: Number of RPC round-trips made to the local YB-TServer for main table scans.

- Storage Table Rows Scanned: The total number of rows visited in tables to identify the final resultset.

- Storage Table Writes : Number of requests issued to the local YB-TServer to perform inserts/deletes/updates on a non-indexed table.

- Storage Index Rows Scanned: The total number of rows visited in indexes to identify the final result set.

- Storage Index Writes : Number of requests issued to the local YB-TServer to perform inserts/deletes/updates on an index.

- Storage Read Requests: The sum of number of table reads and index reads across all plan nodes.

- Storage Rows Scanned: Sum of Storage Table/Index row counters.

- Storage Write Requests: Sum of all Storage Table Writes and Storage Index Writes.

- Catalog Read Requests: Number of requests to read catalog information from YB-Master.

- Catalog Write Requests: Number of requests to write catalog information to YB-Master.

- Storage Flush Requests: Number of times buffered writes have been flushed to the local YB-TServer.

Using explain

The following examples show how to improve query performance using output of the EXPLAIN ANALYZE. First, set up a local cluster for testing out the examples.

Set up a local cluster

If a local universe is currently running, first destroy it.

Start a local three-node universe with an RF of 3 by first creating a single node, as follows:

./bin/yugabyted start \

--advertise_address=127.0.0.1 \

--base_dir=${HOME}/var/node1 \

--cloud_location=aws.us-east-2.us-east-2a

On macOS, the additional nodes need loopback addresses configured, as follows:

sudo ifconfig lo0 alias 127.0.0.2

sudo ifconfig lo0 alias 127.0.0.3

Next, join more nodes with the previous node as needed. yugabyted automatically applies a replication factor of 3 when a third node is added.

Start the second node as follows:

./bin/yugabyted start \

--advertise_address=127.0.0.2 \

--base_dir=${HOME}/var/node2 \

--cloud_location=aws.us-east-2.us-east-2b \

--join=127.0.0.1

Start the third node as follows:

./bin/yugabyted start \

--advertise_address=127.0.0.3 \

--base_dir=${HOME}/var/node3 \

--cloud_location=aws.us-east-2.us-east-2c \

--join=127.0.0.1

Set up tables

-

Create a key value table as follows:

CREATE TABLE kvstore ( key VARCHAR, value VARCHAR, PRIMARY KEY(key) ); -

Add 10,000 key-values into the tables as follows:

SELECT setseed(0.5); -- to help generate the same random values INSERT INTO kvstore(key, value) SELECT substr(md5(random()::text), 1, 7), substr(md5(random()::text), 1, 10) FROM generate_series(1,10000); -

Run the ANALYZE command on the database to gather statistics for the query planner to use.

ANALYZE kvstore;

Speed up lookups

Use EXPLAIN on a basic query to fetch a value from the kvstore table.

COSTS OFF option has also been added to show just the estimated costs.

EXPLAIN (ANALYZE, DIST, COSTS OFF) select value from kvstore where key='cafe32c';

You should see an output similar to the following:

1 QUERY PLAN

2-------------------------------------------------------------------------------------

3 Index Scan using kvstore_pkey on kvstore (actual time=1.087..1.090 rows=1 loops=1)

4 Index Cond: ((key)::text = 'cafe32c'::text)

5 Storage Table Read Requests: 1

6 Storage Table Read Execution Time: 0.971 ms

7 Storage Table Rows Scanned: 1

8 Planning Time: 0.063 ms

9 Execution Time: 1.150 ms

10 Storage Read Requests: 1

11 Storage Read Execution Time: 0.971 ms

12 Storage Rows Scanned: 1

13 Storage Execution Time: 0.971 ms

From line 3, you can see that an index scan was done on the table (via Index Scan) using the primary key kvstore_pkey to retrive one row (via rows=1). The Storage Rows Scanned: 1 indicates that as only one row was looked up, this was an optimal execution.

Fetch the key for a given value:

EXPLAIN (ANALYZE, DIST, COSTS OFF) select * from kvstore where value='85d083991d';

You should see an output similar to the following:

1 QUERY PLAN

2-------------------------------------------------------------------

3 Seq Scan on kvstore (actual time=3.719..3.722 rows=1 loops=1)

4 Storage Filter: ((value)::text = '85d083991d'::text)

5 Storage Table Read Requests: 1

6 Storage Table Read Execution Time: 3.623 ms

7 Storage Table Rows Scanned: 10000

8 Planning Time: 1.176 ms

9 Execution Time: 3.771 ms

10 Storage Read Requests: 1

11 Storage Read Execution Time: 3.623 ms

12 Storage Rows Scanned: 10000

13 Catalog Read Requests: 1

14 Catalog Read Execution Time: 0.963 ms

15 Storage Execution Time: 4.586 ms

Immediately from line 3, you can see that this was a sequential scan (via Seq Scan). The actual rows=1 attribute indicates that only one row was returned, but Storage Rows Scanned: 10000 on line 12 indicates that all the 10,000 rows in the table were looked up to find one row. So, the execution time 3.771 ms was higher than the previous query. You can use this information to convert the sequential scan into an index scan by creating an index as follows:

CREATE INDEX idx_value_1 ON kvstore(value);

If you run the same fetch by value query, you should see an output similar to the following:

1# EXPLAIN (ANALYZE, DIST, COSTS OFF) select * from kvstore where value='85d083991d';

2 QUERY PLAN

3-----------------------------------------------------------------------------------

4 Index Scan using idx_value_1 on kvstore (actual time=1.884..1.888 rows=1 loops=1)

5 Index Cond: ((value)::text = '85d083991d'::text)

6 Storage Table Read Requests: 1

7 Storage Table Read Execution Time: 0.861 ms

8 Storage Table Rows Scanned: 1

9 Storage Index Read Requests: 1

10 Storage Index Read Execution Time: 0.867 ms

11 Storage Index Rows Scanned: 1

12 Planning Time: 0.063 ms

13 Execution Time: 1.934 ms

14 Storage Read Requests: 2

15 Storage Read Execution Time: 1.729 ms

16 Storage Rows Scanned: 2

17 Storage Execution Time: 1.729 ms

18 Peak Memory Usage: 24 kB

In this run, Index scan was used and the execution time has been lowered (1.934 ms - 40% improvement). Only one row was returned (via rows=1), but two rows were scanned (Storage Rows Scanned: 2) as one row was looked up from the index (via Storage Index Rows Scanned: 1) and one row was looked up from the table (via Storage Table Rows Scanned: 1). This is because, the executor looked up the row for the matching value: 85d083991d from the index but had to go to the main table to fetch the key, as we have specified SELECT * in our query. Only the values are present in the index and not the keys.

You can avoid this by including the key column in the index as follows:

CREATE INDEX idx_value_2 ON kvstore(value) INCLUDE(key);

If you run the same fetch by value query, you should see an output similar to the following:

1# EXPLAIN (ANALYZE, DIST, COSTS OFF) select * from kvstore where value='85d083991d';

2 QUERY PLAN

3----------------------------------------------------------------------------------------

4 Index Only Scan using idx_value_2 on kvstore (actual time=1.069..1.072 rows=1 loops=1)

5 Index Cond: (value = '85d083991d'::text)

6 Storage Index Read Requests: 1

7 Storage Index Read Execution Time: 0.965 ms

8 Storage Index Rows Scanned: 1

9 Planning Time: 0.074 ms

10 Execution Time: 1.117 ms

11 Storage Read Requests: 1

12 Storage Read Execution Time: 0.965 ms

13 Storage Rows Scanned: 1

14 Storage Execution Time: 0.965 ms

Notice that the operation has become an Index Only Scan instead of the Index Scan as before. Only one row has been scanned (via Storage Rows Scanned: 1) as only the index row was scanned (via Storage Index Rows Scanned: 1). The exection time has now been lowered to 1.117 ms, a 40% improvement over previous execution.

Optimizing for ordering

Fetch all the values starting with a prefix, say ca.

EXPLAIN (ANALYZE, DIST, COSTS OFF) SELECT * FROM kvstore WHERE value LIKE 'ca%' ORDER BY VALUE;

You should see an output similar to the following:

1 QUERY PLAN

2-----------------------------------------------------------------------------

3 Sort (actual time=4.007..4.032 rows=41 loops=1)

4 Sort Key: value

5 Sort Method: quicksort Memory: 28kB

6 -> Seq Scan on kvstore (actual time=3.924..3.957 rows=41 loops=1)

7 Storage Filter: ((value)::text ~~ 'ca%'::text)

8 Storage Table Read Requests: 1

9 Storage Table Read Execution Time: 3.846 ms

10 Storage Table Rows Scanned: 10000

11 Planning Time: 1.119 ms

12 Execution Time: 4.101 ms

13 Storage Read Requests: 1

14 Storage Read Execution Time: 3.846 ms

15 Storage Rows Scanned: 10000

16 Catalog Read Requests: 1

17 Catalog Read Execution Time: 0.877 ms

18 Storage Execution Time: 4.723 ms

Only 41 rows were returned (rows=41) but a sequential scan (Seq Scan on kvstore) was performed on all the rows (Storage Rows Scanned: 10000). Also, the data had to be sorted (via Sort Method: quicksort) on value (via Sort Key: value). You can improve this execution by modifying the above created index into a range index, so that you can avoid the sorting operation, and reduce the number of rows scanned.

CREATE INDEX idx_value_3 ON kvstore(value ASC) INCLUDE(key);

If you run the same query, you should see the following output:

1# EXPLAIN (ANALYZE, DIST, COSTS OFF) SELECT * FROM kvstore WHERE value LIKE 'ca%' ORDER BY VALUE;

2 QUERY PLAN

3-----------------------------------------------------------------------------------------

4 Index Only Scan using idx_value_3 on kvstore (actual time=0.884..0.935 rows=41 loops=1)

5 Index Cond: ((value >= 'ca'::text) AND (value < 'cb'::text))

6 Storage Filter: ((value)::text ~~ 'ca%'::text)

7 Storage Index Read Requests: 1

8 Storage Index Read Execution Time: 0.792 ms

9 Storage Index Rows Scanned: 41

10 Planning Time: 4.290 ms

11 Execution Time: 1.008 ms

12 Storage Read Requests: 1

13 Storage Read Execution Time: 0.792 ms

14 Storage Rows Scanned: 41

15 Catalog Read Execution Time: 3.824 ms

16 Storage Execution Time: 4.616 ms

Now, only 41 rows were scanned (via Storage Rows Scanned: 41) to retrieve 41 rows (via rows=41) and there was no sorting involved as the data is stored sorted in the range index in ASC order. Even though YugabyteDB has optimizations for reverse scans, if most of your queries are going to retrieve the data in DESC order, then it would be better to define the ordering as DESC when creating a range sharded table/index.

Learn more

- Refer to Get query statistics using pg_stat_statements to track planning and execution of all the SQL statements.

- Refer to View live queries with pg_stat_activity to analyze live queries.

- Refer to View COPY progress with pg_stat_progress_copy to track the COPY operation status.

- Refer to Optimize YSQL queries using pg_hint_plan to show the query execution plan generated by YSQL.