Handling zone failures

YugabyteDB is resilient to a single-domain failure in a deployment with a replication factor (RF) of 3. To survive zone failures, you deploy across multiple zones. Let's see how YugabyteDB survives a zone failure.

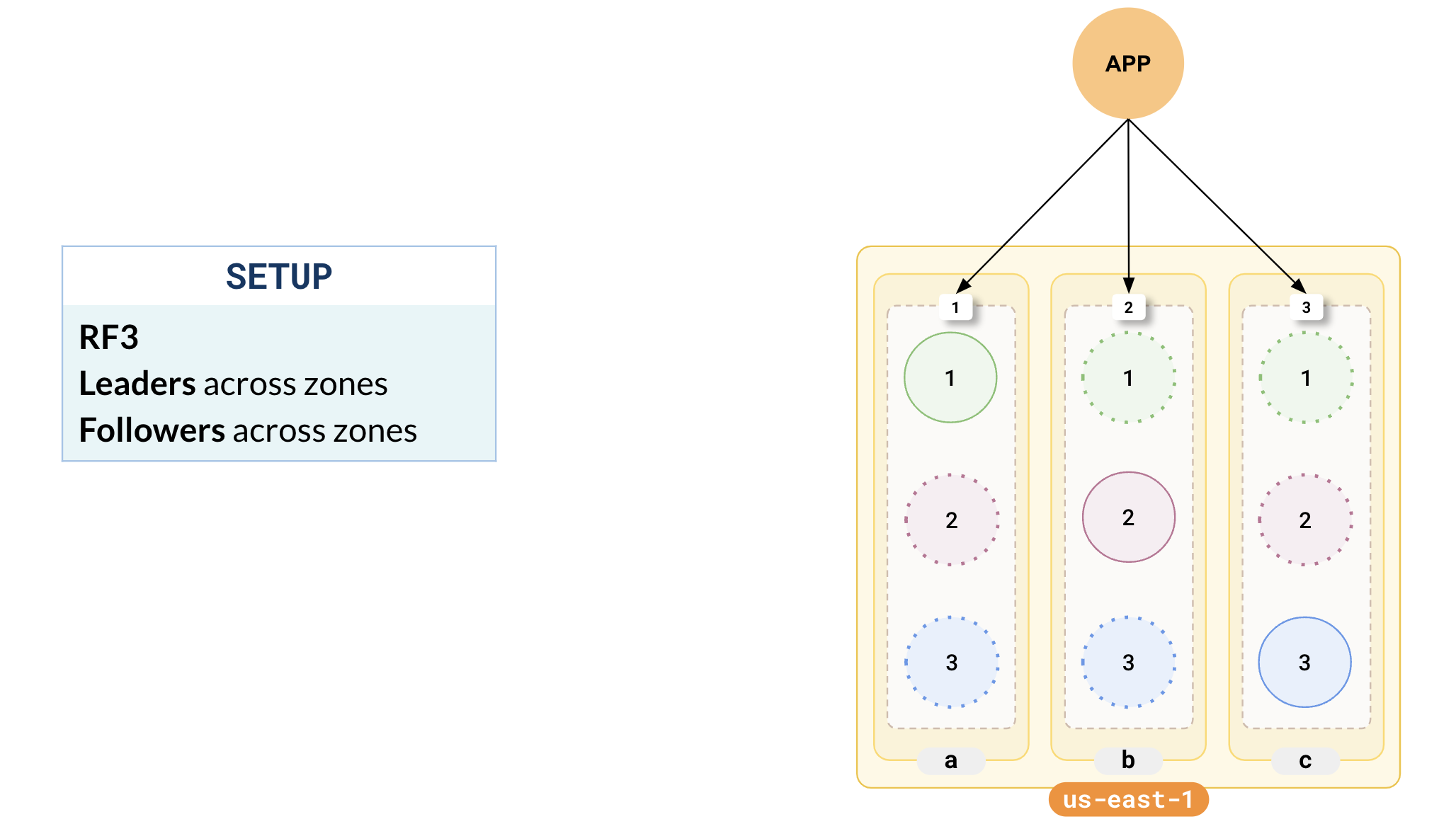

Setup

Consider a setup where YugabyteDB is deployed across three zones in a single region (us-east-1). Say it is an RF 3 cluster with leaders and followers distributed across the 3 zones with 3 tablets (A, B, and C).

Set up a local cluster

If a local universe is currently running, first destroy it.
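As a minimal sketch of that cleanup step, the following helper runs `yugabyted destroy` against each node directory used on this page. The function name `destroy_local_universe` and the `YUGABYTED` override variable are illustrative assumptions, not part of the product.

```shell
# Path to the yugabyted binary; override YUGABYTED if your install lives elsewhere.
YUGABYTED="${YUGABYTED:-./bin/yugabyted}"

# Destroy every local node whose base directory exists.
# The node1..node3 directories match the base_dir values used on this page.
destroy_local_universe() {
  for n in 1 2 3; do
    dir="${HOME}/var/node${n}"
    if [ -d "${dir}" ]; then
      "${YUGABYTED}" destroy --base_dir="${dir}"
    fi
  done
}
```

Call `destroy_local_universe` from the YugabyteDB home directory before starting the new nodes. Directories that do not exist are skipped.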

Start a local three-node universe with an RF of 3 by first creating a single node, as follows:

./bin/yugabyted start \
    --advertise_address=127.0.0.1 \
    --base_dir=${HOME}/var/node1 \
    --cloud_location=aws.us-east.us-east-1a

On macOS, the additional nodes need loopback addresses configured, as follows:

sudo ifconfig lo0 alias 127.0.0.2

sudo ifconfig lo0 alias 127.0.0.3

Next, join more nodes with the previous node as needed. yugabyted automatically applies a replication factor of 3 when a third node is added.

Start the second node as follows:

./bin/yugabyted start \
    --advertise_address=127.0.0.2 \
    --base_dir=${HOME}/var/node2 \
    --cloud_location=aws.us-east.us-east-1b \
    --join=127.0.0.1

Start the third node as follows:

./bin/yugabyted start \
    --advertise_address=127.0.0.3 \
    --base_dir=${HOME}/var/node3 \
    --cloud_location=aws.us-east.us-east-1c \
    --join=127.0.0.1

After starting the yugabyted processes on all the nodes, configure the data placement constraint of the universe, as follows:

./bin/yugabyted configure data_placement --base_dir=${HOME}/var/node1 --fault_tolerance=zone

This command can be executed on any node where you already started YugabyteDB.

To check the status of a running multi-node universe, run the following command:

./bin/yugabyted status --base_dir=${HOME}/var/node1

To set up a universe, refer to Set up a YugabyteDB Anywhere universe.

To set up a cluster, refer to Set up a YugabyteDB Aeon cluster.

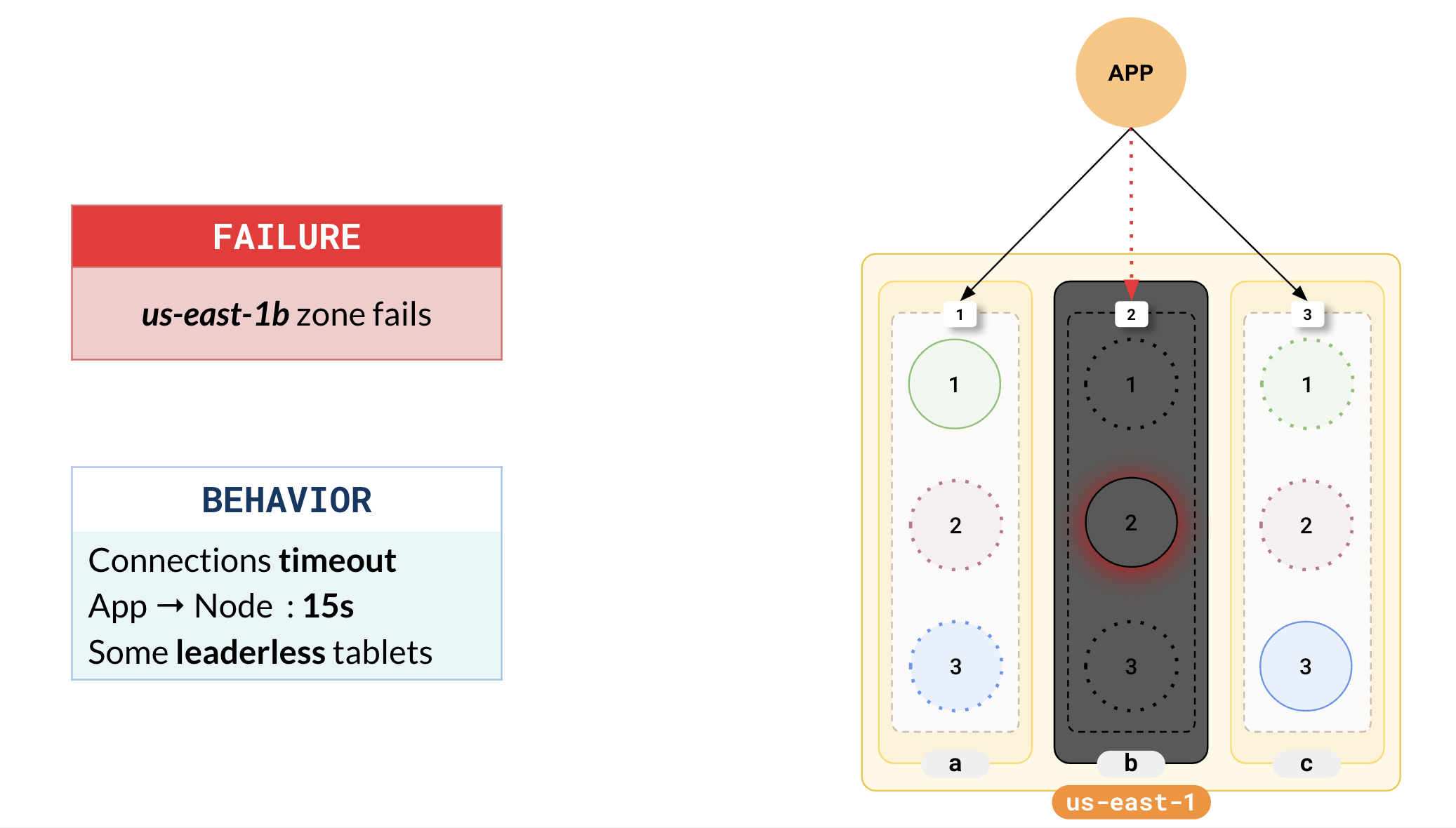

Zone fails

Suppose one of your zones, us-east-1b, fails. Connections that your application has already established to nodes in us-east-1b start timing out (the typical timeout is 15s). New connection attempts to those nodes fail immediately, and some tablets are left leaderless. In the following illustration, tablet B has lost its leader.

Simulate failure of a zone locally

To simulate the failure of the second zone locally, stop the second node, as follows:

./bin/yugabyted stop --base_dir=${HOME}/var/node2
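To confirm that the simulated outage took effect, you could probe each node with a trivial query. The following is a sketch only: the helper name `report_nodes` and the `YSQLSH` override variable are hypothetical, and it assumes that `ysqlsh`, like other libpq-based clients, honors the `PGCONNECT_TIMEOUT` environment variable.

```shell
# Path to ysqlsh; override YSQLSH if your install lives elsewhere.
YSQLSH="${YSQLSH:-./bin/ysqlsh}"

# Print "<address> up" or "<address> down" for each local node, depending on
# whether a trivial YSQL query succeeds within the connect timeout (assumed
# to be controlled by PGCONNECT_TIMEOUT, in seconds).
report_nodes() {
  for addr in 127.0.0.1 127.0.0.2 127.0.0.3; do
    if PGCONNECT_TIMEOUT=3 "${YSQLSH}" -h "${addr}" -c 'SELECT 1;' >/dev/null 2>&1; then
      echo "${addr} up"
    else
      echo "${addr} down"
    fi
  done
}
```

After stopping the second node, running `report_nodes` should report 127.0.0.2 as down while the other two nodes continue to answer queries.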

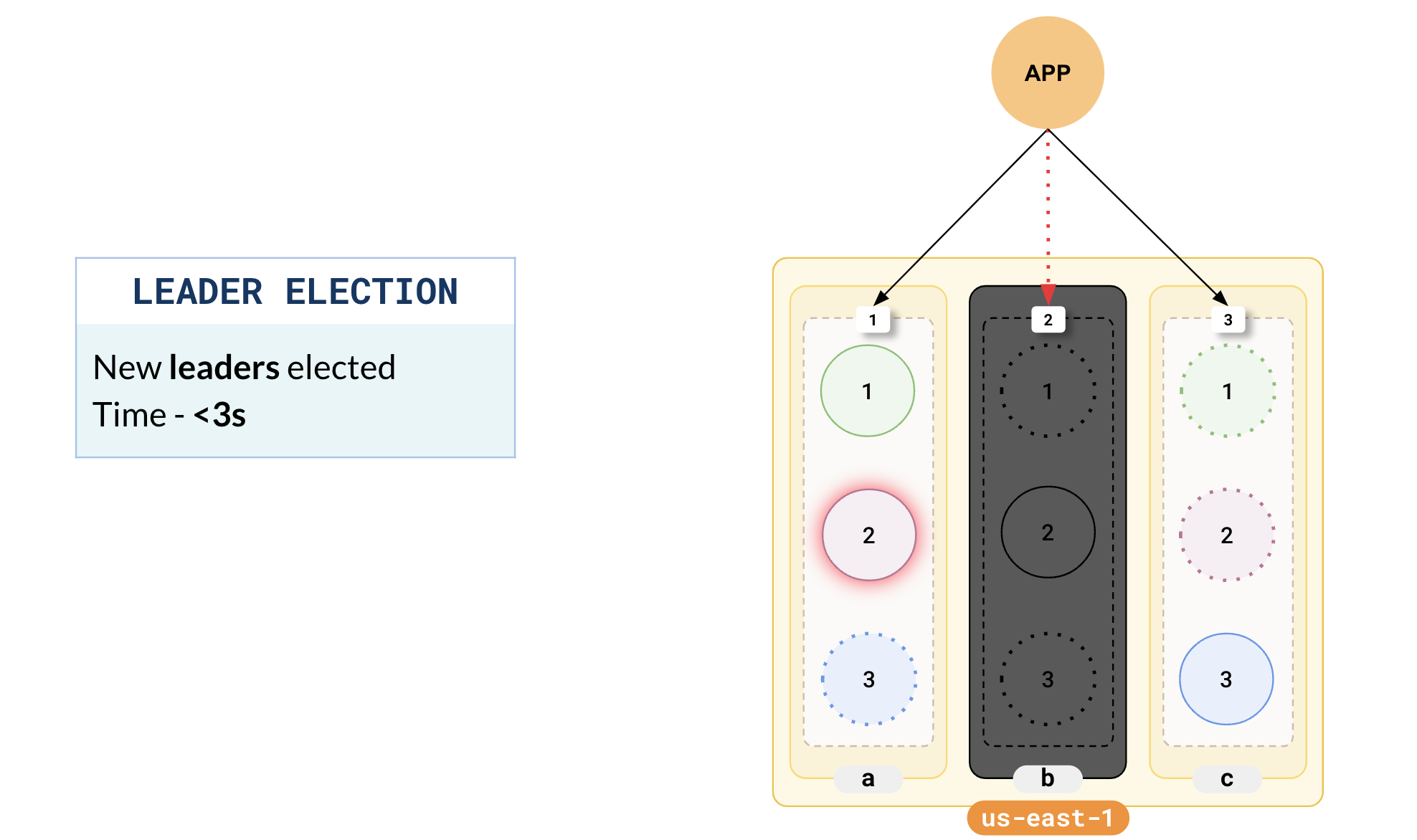

Leader election

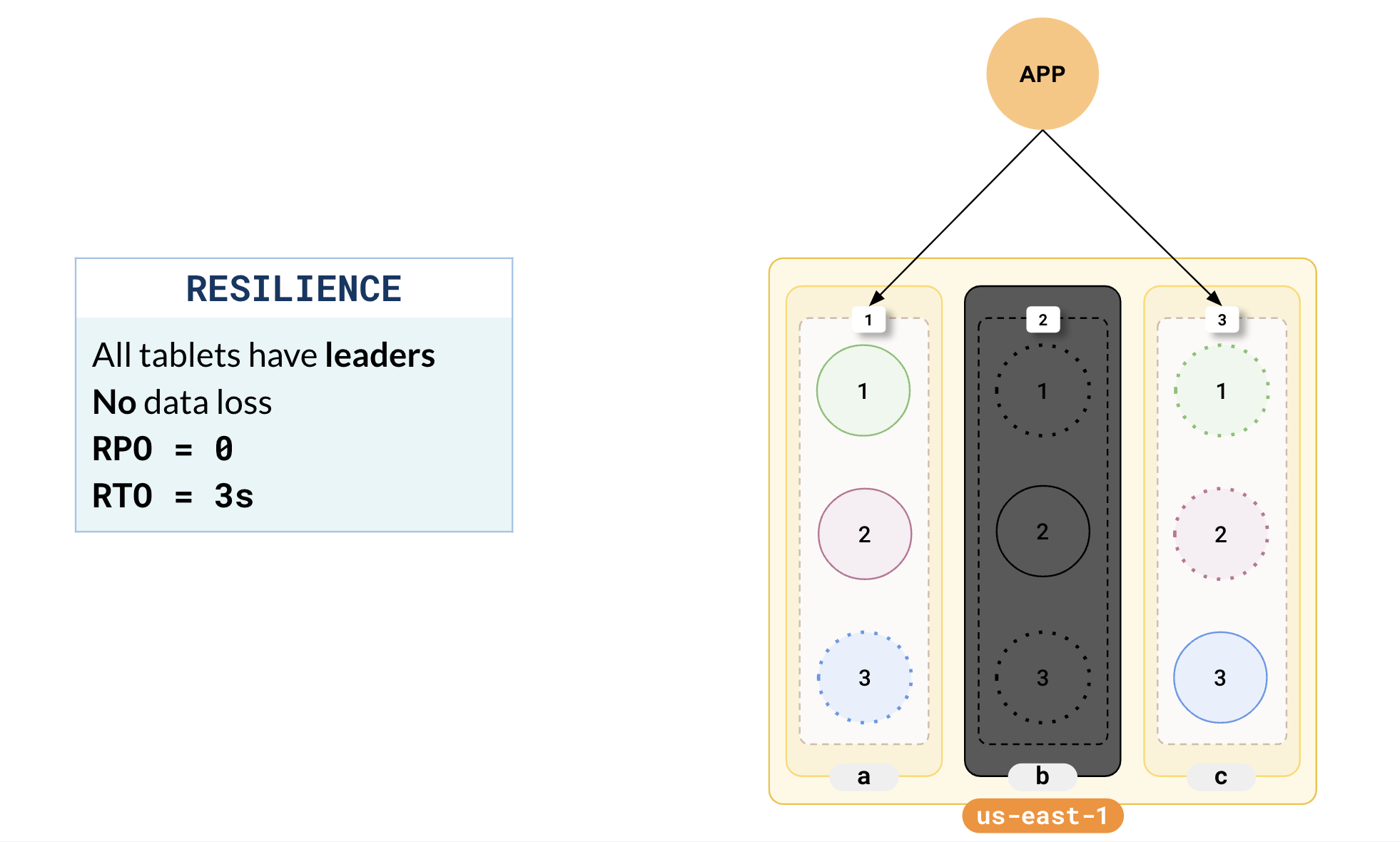

All nodes in the cluster constantly ping each other to check liveness. When a node goes offline, the failure is detected within 3s and a leader election is triggered. For each tablet whose leader was on the offline node, one of its followers is promoted to leader. Leader election is fast, and no data is lost.

In the illustration, you can see that a follower of tablet B in zone-a has been elected as the new leader.

Cluster is fully functional

After new leaders have been elected, there are no leaderless tablets and the cluster is fully functional again. No data is lost, because the follower that was elected leader has the latest data (guaranteed by Raft replication). The recovery time is about 3s. Note, however, that the cluster is now under-replicated, because some of the followers are offline.

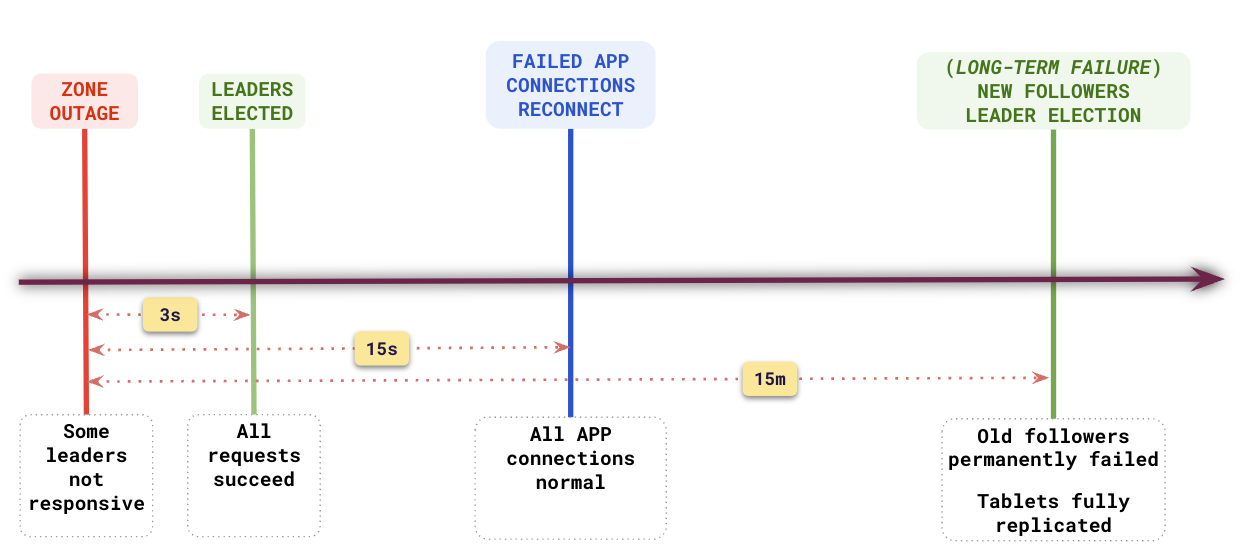

Recovery timeline

After a zone outage occurs, the cluster takes about 3s to detect that nodes are offline and complete a leader election, after which all requests succeed again. Because of default TCP timeouts, connections that applications had already established to the failed nodes take about 15s to fail. When the applications reconnect, they connect to the remaining active nodes.

At this point, the cluster is under-replicated because of the loss of followers. If the failed nodes don't come back online within 15 minutes, their followers are considered failed, and new followers are created to restore the replication factor (3). In other words, re-replication happens only when the node failure is treated as long-term.
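The milestones above can be laid out as simple arithmetic. The following sketch prints each milestone relative to an outage start time given in seconds since the epoch. The offsets are the approximate values quoted in this section, and the function name `recovery_timeline` is illustrative only.

```shell
# Print the recovery milestones for a zone outage that starts at $1
# (seconds since the epoch). All offsets are approximate values from the text.
recovery_timeline() {
  outage="$1"
  detect=3                    # failure detected and leader election complete
  tcp_timeout=15              # already-established connections time out
  rereplicate=$((15 * 60))    # offline followers are considered failed
  echo "t+0s: zone outage at ${outage}"
  echo "t+${detect}s: new leaders elected at $((outage + detect))"
  echo "t+${tcp_timeout}s: stale connections time out at $((outage + tcp_timeout))"
  echo "t+${rereplicate}s: re-replication begins at $((outage + rereplicate))"
}
```

For example, `recovery_timeline "$(date +%s)"` shows the milestones for an outage that starts now.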